Introduction: A Double-Edged Sword

Artificial Intelligence is everywhere today, from chatbots that listen as we talk through stress, trauma, and worries to apps that guide us through meditation, breathwork, and other deep relaxation techniques. Many of us believe technology can make mental health care more accessible and personalized. But here is the paradox: the same technology that promises healing can sometimes harm us.

As Sherry Turkle, MIT professor and author of Alone Together, once said: “Technology proposes itself as the architect of our intimacies. But when we allow it to shape our relationships, we risk letting it define us.”

This tension—between help and harm—is what we call the AI Mental Health Paradox.

The Promise of AI in Mental Health

Let us begin with the positives. AI-driven tools have already made important contributions:

- 24/7 Availability: Chatbots and mental health apps provide support anytime, anywhere.
- Accessibility: For people in remote areas or with financial constraints, AI tools make mental health care more reachable.
- Early Detection: Algorithms can detect patterns in speech, sleep, or social media activity to flag early signs of depression or anxiety.
- Personalization: Apps tailor breathing exercises, mindfulness practices, and coping strategies to our unique needs.

A study published in JMIR Mental Health (2023) found that AI chatbots reduced symptoms of anxiety and depression in users within four weeks of regular interaction. Clearly, there is potential.

When Help Turns Harmful

Despite these advantages, many of us are discovering that AI is not always the friend it promises to be. Here’s where the paradox deepens:

1. Over-Reliance on Chatbots

While AI can provide comfort, it cannot replace human empathy. Research published in The Lancet Digital Health (2022) warned that over-reliance on AI chatbots may lead individuals to avoid seeking professional help, delaying effective treatment.

2. Privacy and Data Concerns

Mental health data is deeply personal. Yet many apps have been found to share sensitive user data with advertisers. A 2022 report by the Mozilla Foundation revealed that over 29 mental health apps had poor privacy practices, putting user trust and safety at risk.

3. Generic or Inaccurate Advice

AI is only as good as its training data. In some cases, users have reported receiving shallow or even harmful advice. Imagine someone struggling with suicidal thoughts and receiving generic, scripted reassurance—it could do more harm than good.

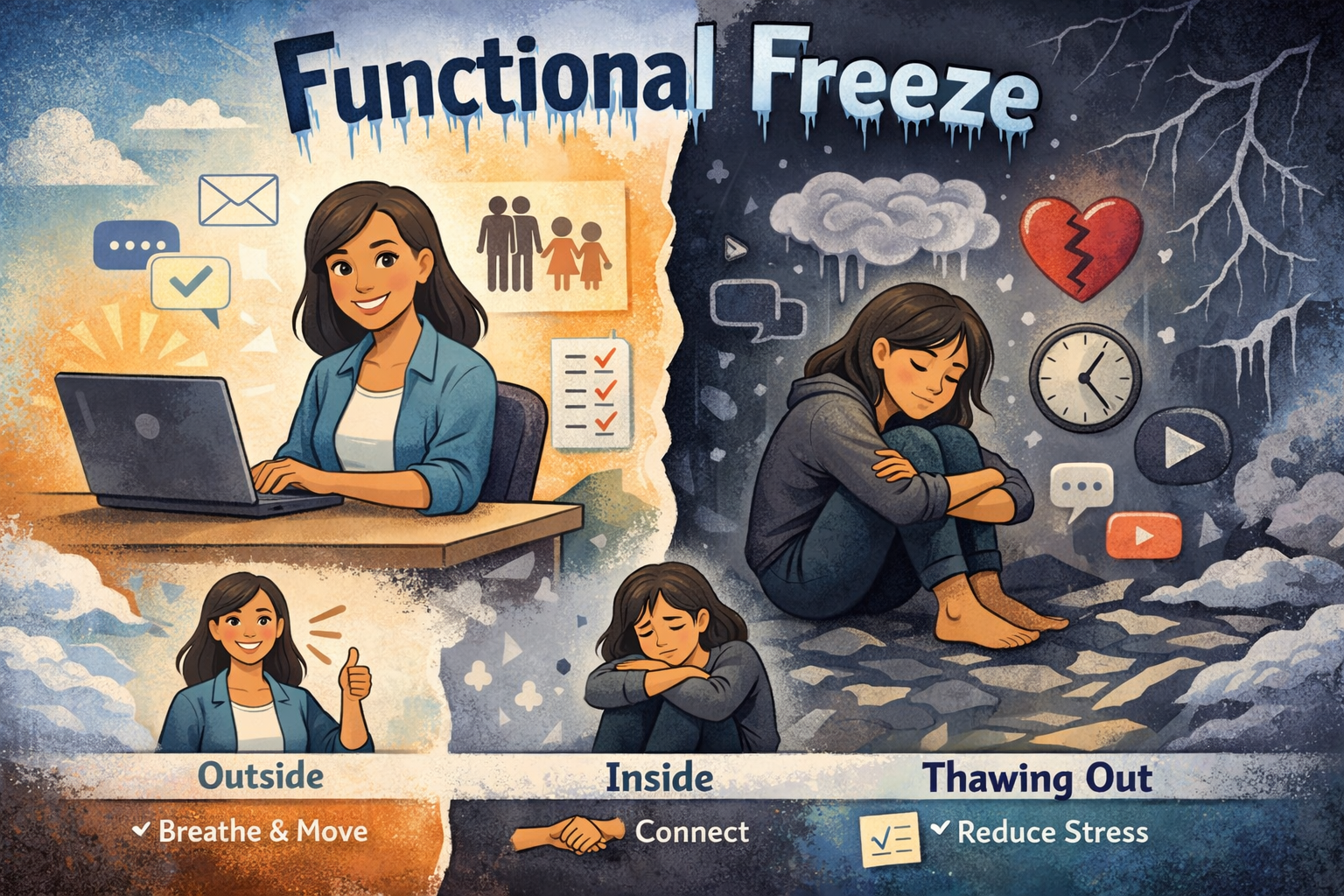

4. Screen Fatigue

Ironically, the very devices that deliver AI mental health support are also a major cause of mental strain. Excessive screen time, constant notifications, and digital overstimulation can worsen anxiety and burnout.

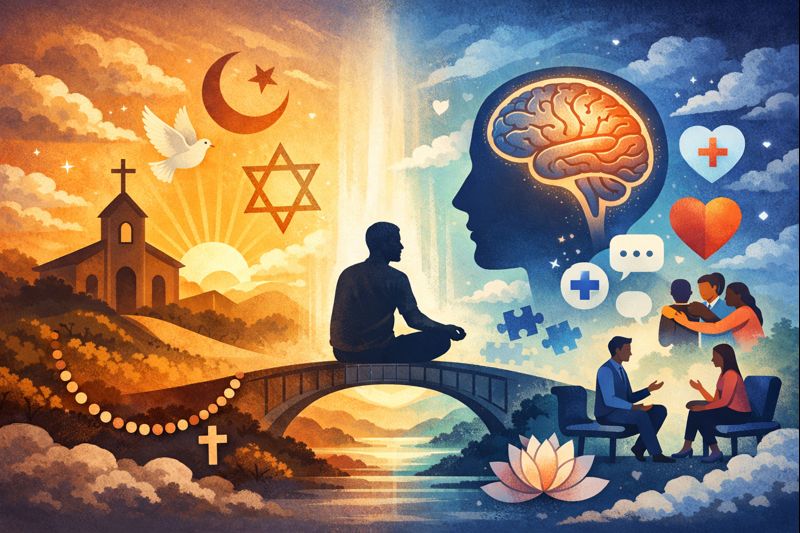

The Human Side of the Paradox

We need to remind ourselves that healing often comes through connection—with ourselves and with others.

As Viktor Frankl, the renowned psychiatrist, once said: “Life is never made unbearable by circumstances, but only by lack of meaning and purpose.”

No matter how advanced AI becomes, it cannot give us genuine meaning, empathy, or the warmth of human presence. Technology can guide us, but healing is still a profoundly human journey.

Balancing AI and Humanity

So how do we navigate this paradox? The answer is not to reject AI, but to use it wisely:

-

Use AI as a Tool, not a Therapist

We must remember that AI can support, but not substitute, professional care. It works best as a first step—to provide basic coping strategies or immediate support before we reach out to a human expert. -

Strengthen Privacy and Ethics

Stricter regulations and transparent practices are needed so that users can trust their private and intimate data is safe. -

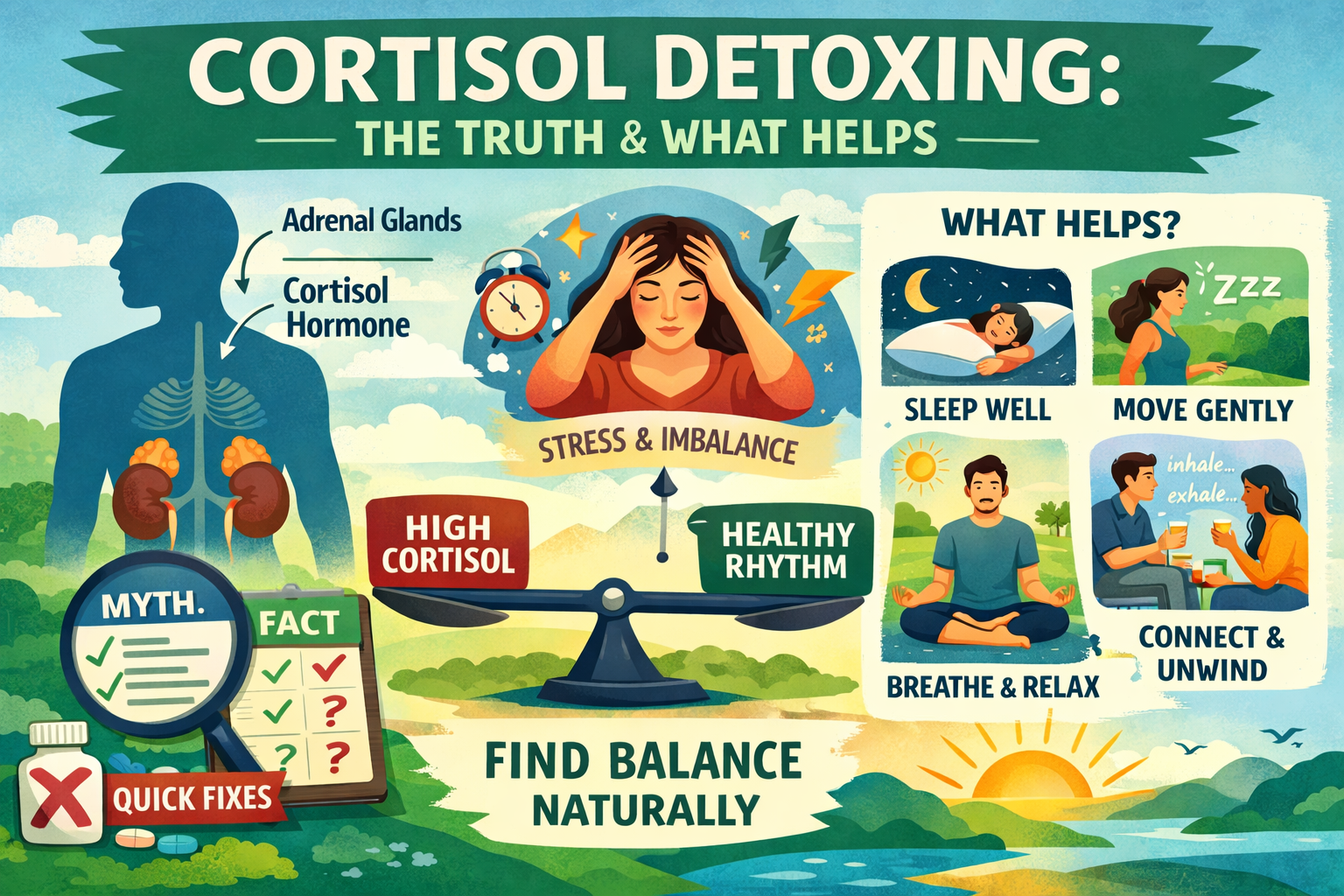

Encourage Digital Detox

Even as we use AI apps, we should balance them with offline activities. Walk daily in a garden or neighbourhood forest, practice deep breathing exercises, and engage in meaningful conversations with friends. -

Hybrid Models of Care

The future lies in combining AI tools with professional therapy. A psychologist supported by AI insights can give more accurate, timely, and compassionate care.

Conclusion: Walking the Middle Path

The AI Mental Health Paradox reminds us that technology is neither good nor bad—it is what we make of it. If we treat AI as a partner, not a replacement, we can unlock its benefits without falling into its traps.

We must keep asking: Does this tool make me feel more connected, more understood, more human? If the answer is no, it may be time to step back.

As Albert Einstein once said: “The human spirit must prevail over technology.”

Let us use AI to heal, but let us never forget that our deepest healing comes from human connection, purpose, and compassion.

Are you looking for inner peace, deep relaxation, or holistic solutions for mental health? Visit http://themindtherapy.in, your space for online counselling and therapy, free mental health tests, meditation, sound therapy, and more.

Mind Therapy is India's trusted platform for mental health, mindfulness, and holistic healing. Explore expert-led programs, guided meditation, sound therapy and counselling at http://themindtherapy.in